Position: Research Intern

Oct 2019 - Jun 2020

Tech Stack: Java, ANTLR

About

As a Research Intern, I was working alongside Anshuman Dhuliya, a PhD student at IIT Bombay, on his project Synergistic Program ANalyzer (SPAN). My role was to contribute to the Front-end of SPAN - to create a source-to-source compiler that takes a high-level specification as input and transpiles it into its Python equivalent.

Problem

SPAN required data flow analyses as an input and in Python language to apply those optimizations to an input program. There are several ways to specify a data flow analysis but SPAN wanted them to be in a specific format and a way for the end users to be able to write them in an intuitive way.

Goal

To create a domain-specific language that would enable users to specify data flow analyses in an intuitive way, and to transpile the input to its Python equivalent.

Process

- Devising the domain specific language - specDFA

-

Understanding lexers and parsers

To gain a better understanding of lexers and parsers, I familiarized myself with Flex and Bison by creating a JSON parser. -

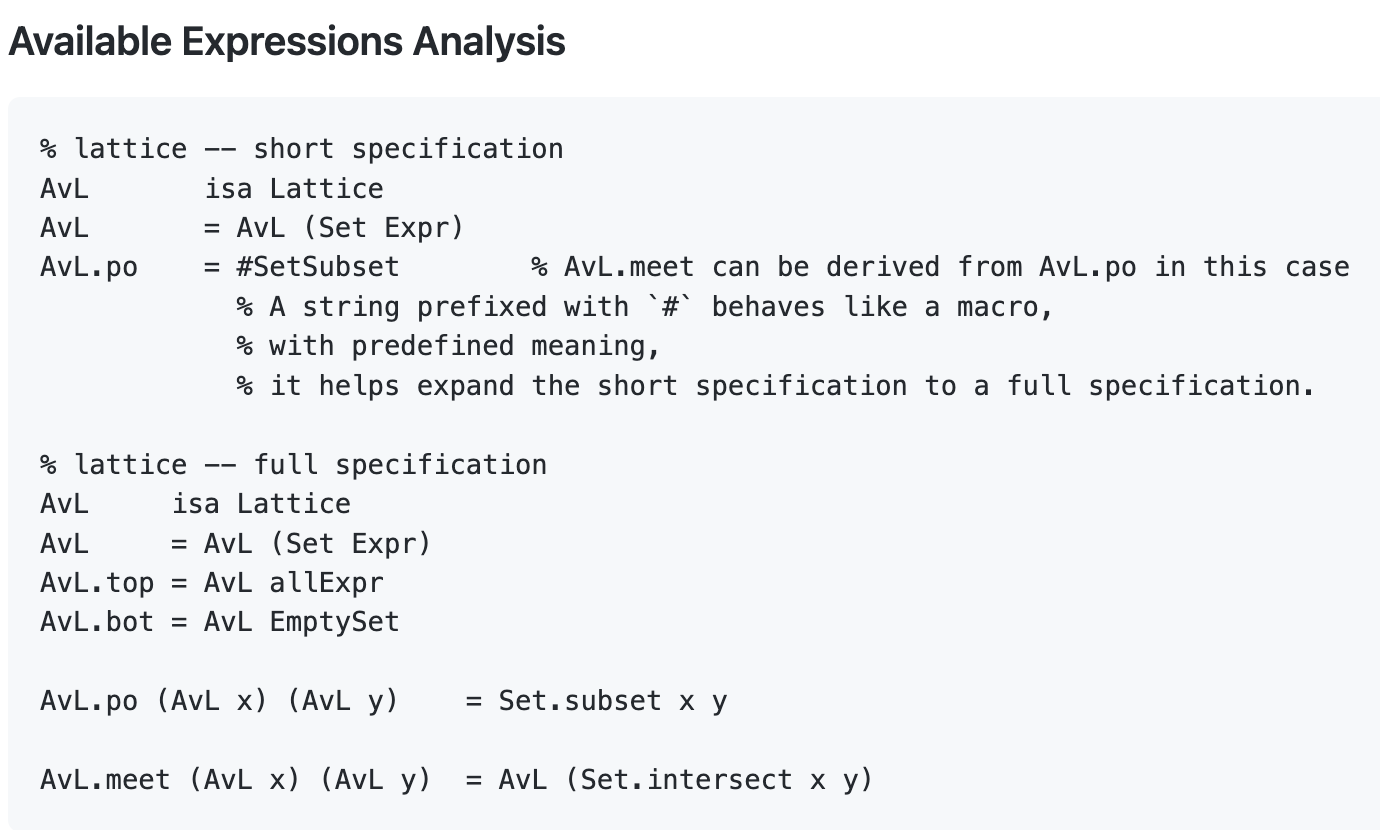

Understanding data flow analysis

This was one of the most important steps as it would determine the syntax of the language to be developed. I researched about and understood the different type of analyses such as Available Expression, Constant Propagation, and Live Range Analysis -

Deciding upon the language syntax

I acquainted myself with Haskell’s syntax to adopt it for specDFA. After several iterations, specDFA’s syntax was agreed upon.

- Converting specDFA to Python

-

Building the Abstract Syntax Tree (AST)

After exploring a few options, I usedANTLRto build the AST as it provides a convenient way to build and walk the parse tree. While walking the parse tree, I store all the required context at every step and build the AST required for the code generation step. -

Walking the AST and emitting Python code

I used the builder pattern to emit the equivalent Python code. Since the AST stores all the required information, this step simply involved walking the AST and string formatting the context to generate the Python code

class AvL(types.Lattice):

def __init__(self, func: obj.Func, val: Optional[set[types.Expr]] = None, top: bool = False, bot: bool = False):

super().__init__(func, val, top, bot)

def meet(self, other):

return set.intersect(self.val, other.val)

def __lt__(self, other):

return set.issubset(self.val, other.val)

What I learned

- I learned how to work on a project where the requirements are vague and changed often

- It helped me improve my research skills - I read multiple research papers and books to learn about data flow analyses and static program analyzers

- It introduced me to the back-end of compilers

- I learned about the Builder and Visitor design patterns